Spark Read JSON from S3

A common question is which is the fastest way to read JSON files from S3. Spark can read and write data in object stores through filesystem connectors, such as those implemented in Hadoop, and Amazon EMR offers features to help optimize performance when using Spark with Amazon S3. Spark SQL can automatically infer the schema of a JSON dataset and load it as a `Dataset[Row]`. Inference is the default when reading a JSON file, but it can be skipped by supplying a schema. The core syntax for reading data in Apache Spark is `DataFrameReader.format(…).option("key", …)`. Ensure the JSON files are well formed. The prerequisite for this guide is PySpark.
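The reader chain described above can be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: the helper names `s3a_uri` and `read_json`, the bucket and key, and the use of the `s3a://` scheme (the Hadoop S3A connector; EMR setups commonly use `s3://` instead) are all assumptions made for the example. The `spark` argument is expected to be an existing `SparkSession`.

```python
from typing import Any, Dict, Optional


def s3a_uri(bucket: str, key: str) -> str:
    """Build an s3a:// URI. Bucket and key are hypothetical placeholders."""
    return f"s3a://{bucket}/{key.lstrip('/')}"


def read_json(spark: Any, path: str, schema: Optional[Any] = None,
              options: Optional[Dict[str, str]] = None) -> Any:
    """Read JSON via DataFrameReader.format(...).option(...).load(...)."""
    reader = spark.read.format("json")
    for key, value in (options or {}).items():
        reader = reader.option(key, value)
    if schema is not None:
        # Supplying a schema (a StructType or a DDL string) skips inference.
        reader = reader.schema(schema)
    return reader.load(path)
```

With a real session, a call might look like `read_json(spark, s3a_uri("my-bucket", "data/people.json"), schema="name STRING, age INT")`. Leaving `schema` as `None` falls back to Spark's automatic inference.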

PySpark can read a JSON file into a DataFrame, read multiline JSON, read multiple files at a time, and read all files in a directory. The reader signature fragment `Union[str, List[str], None] = None, **options` indicates that a path argument may be a single string or a list of strings, with further settings passed as keyword options. The `spark.read.text()` method is used to read a text file from S3 into a DataFrame. S3 Select is supported with CSV and JSON files, using the `s3selectCSV` and `s3selectJSON` values to specify the data format. JSON files can also be read from S3 into AWS Glue using PySpark.
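The multiline and multiple-file cases listed above could be handled along these lines. By default Spark expects JSON Lines input (one complete JSON value per line), so a pretty-printed file needs the `multiLine` option. The helpers `needs_multiline` and `read_json_files` are hypothetical names introduced for this sketch, and the sample-based check is only a heuristic, not something Spark does itself.

```python
import json
from typing import Any, List, Union


def needs_multiline(sample: str) -> bool:
    """Return True if the sample is not JSON Lines.

    JSON Lines means every non-empty line parses as a complete JSON value.
    """
    for line in sample.splitlines():
        if not line.strip():
            continue
        try:
            json.loads(line)
        except json.JSONDecodeError:
            return True
    return False


def read_json_files(spark: Any, paths: Union[str, List[str]], sample: str = "") -> Any:
    """Read one path, a list of paths, or a directory of JSON files.

    `spark` is an existing SparkSession; `sample` is a small piece of one
    file's text used to decide whether the multiLine option is needed.
    """
    return spark.read.option("multiLine", needs_multiline(sample)).json(paths)
```

Passing a directory path such as `"s3a://my-bucket/events/"` (a placeholder) reads every file in it, and passing a list reads several files at once.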

This is a quick step-by-step tutorial on how to read JSON files from S3. The generic `load` method also accepts the format as an argument, as in `df = spark.read.load("examples/src/main/resources/people.json", format="json")`.

Reading JSON data in Spark Analyticshut

Web spark sql can automatically infer the schema of a json dataset and load it as a dataset [row]. Web improve spark performance with amazon s3 pdf rss amazon emr offers features to help optimize performance when using spark to. Web the core syntax for reading data in apache spark dataframereader.format(…).option(“key”,. Web spark.read.text() method is used to read a text.

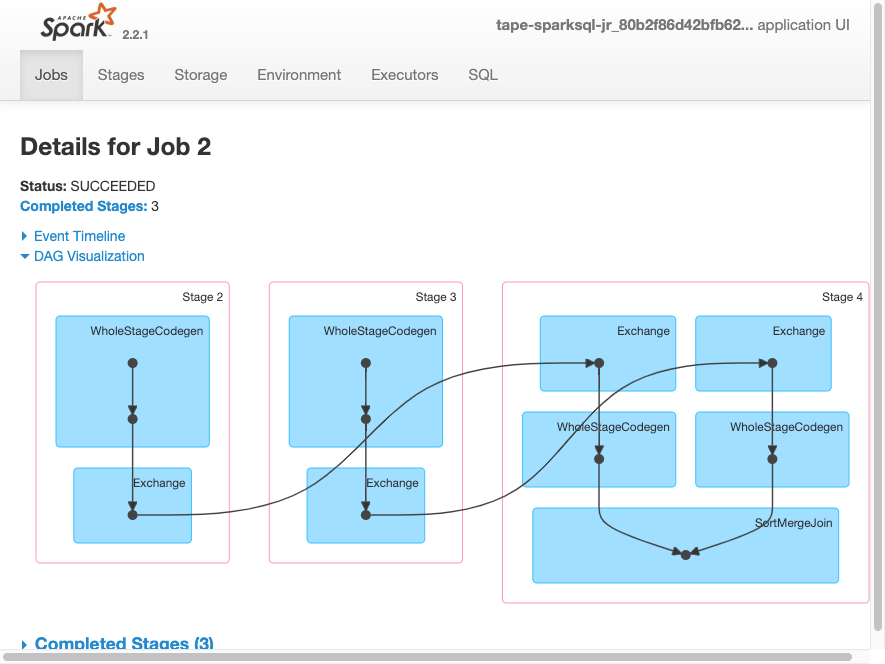

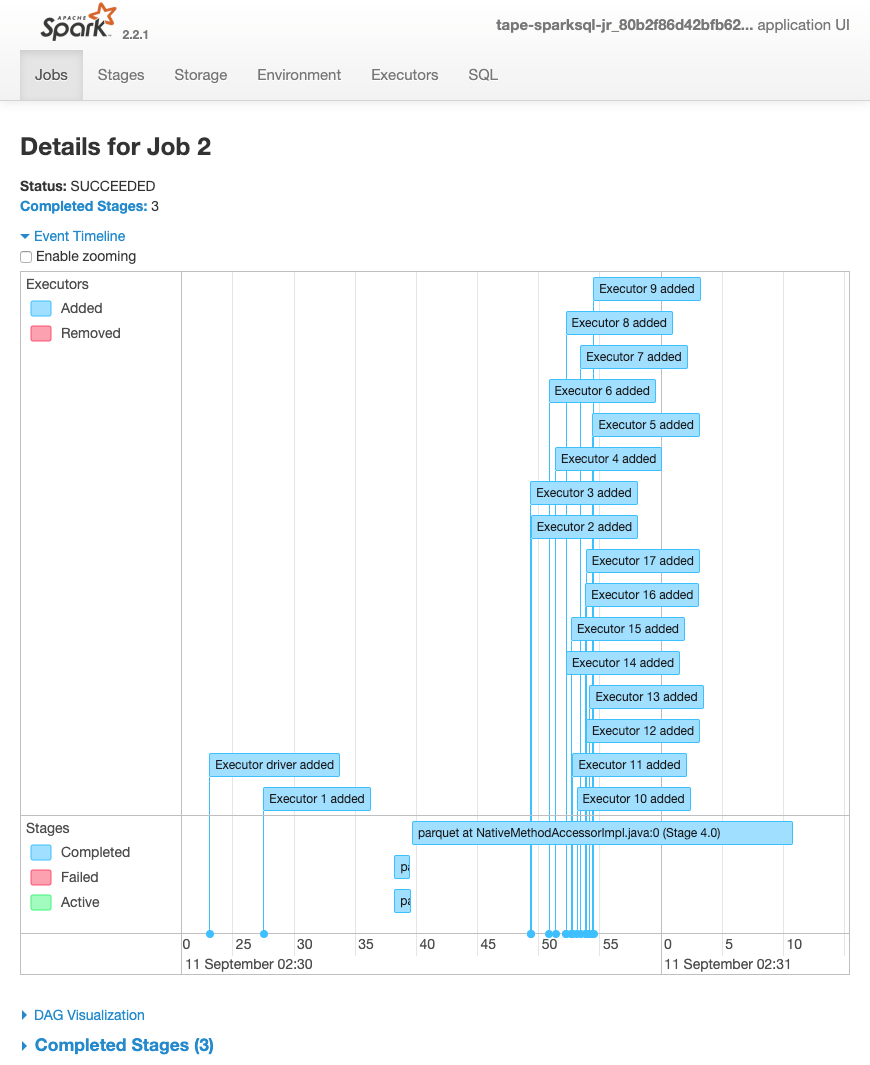

Monitorización de trabajos mediante la interfaz de usuario web de

Modified 2 years, 1 month ago. Web this is a quick step by step tutorial on how to read json files from s3. 2 it seems that the credentials you are using to access the bucket/ folder doesn't have required. Web improve spark performance with amazon s3 pdf rss amazon emr offers features to help optimize performance when using spark.

Write & Read CSV file from S3 into DataFrame Spark by {Examples}

2 it seems that the credentials you are using to access the bucket/ folder doesn't have required. Web 1 answer sorted by: Union [str, list [str], none] = none, **options: Web scala java python r sql spark sql can automatically infer the schema of a json dataset and load it as a dataset [row]. Web the core syntax for reading.

Handle Json File Format Using Pyspark Riset

Web which is the fastest way to read json files from s3 : Web spark sql can automatically infer the schema of a json dataset and load it as a dataset [row]. Web spark can read and write data in object stores through filesystem connectors implemented in hadoop or provided by the. Web february 7, 2023 spread the love by.

Spark SQL Architecture Sql, Spark, Apache spark

Web spark.read.text() method is used to read a text file from s3 into dataframe. Web pyspark read json file into dataframe read json file from multiline read multiple files at a time read all files in a directory read. Web s3 select is supported with csv and json files using s3selectcsv and s3selectjson values to specify the data format. Web.

Monitorización de trabajos mediante la interfaz de usuario web de

2 it seems that the credentials you are using to access the bucket/ folder doesn't have required. Web s3 select is supported with csv and json files using s3selectcsv and s3selectjson values to specify the data format. Web asked 2 years, 1 month ago. Web pyspark read json file into dataframe read json file from multiline read multiple files at.

Spark Read Json From Amazon S3 Reading, Reading writing, Learn to read

I have a directory with folders and each folder contains. Web scala java python r sql spark sql can automatically infer the schema of a json dataset and load it as a dataset [row]. Web the core syntax for reading data in apache spark dataframereader.format(…).option(“key”,. Union [str, list [str], none] = none, **options: Web improve spark performance with amazon s3.

Spark read JSON with or without schema Spark by {Examples}

Web improve spark performance with amazon s3 pdf rss amazon emr offers features to help optimize performance when using spark to. Ensure json files are well. Web 1 answer sorted by: Web february 7, 2023 spread the love by default spark sql infer schema while reading json file, but, we can ignore this and read a. Web which is the.

Read and write data in S3 with Spark Gigahex Open Source Data

Load (examples/src/main/resources/people.json, format = json) df. Web the core syntax for reading data in apache spark dataframereader.format(…).option(“key”,. Web s3 select is supported with csv and json files using s3selectcsv and s3selectjson values to specify the data format. Web spark.read.text() method is used to read a text file from s3 into dataframe. Web 1 answer sorted by:

Spark Read JSON from multiline Spark By {Examples}

Web improve spark performance with amazon s3 pdf rss amazon emr offers features to help optimize performance when using spark to. Web to read json files correctly from s3 to glue using pyspark, follow the steps below: Modified 2 years, 1 month ago. Web asked 2 years, 1 month ago. Web 1 answer sorted by:

I Have a Directory with Folders and Each Folder Contains…

One answer suggests that the credentials used to access the bucket or folder do not have the required permissions.
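When credentials are the suspect, it can help to pass them to Spark explicitly so that it is clear which identity is in use. The following is a sketch under several assumptions: the S3A connector is on the classpath, the keys live in the standard AWS environment variables, and the helper names `s3a_credentials_conf` and `build_session` are hypothetical. The property names `fs.s3a.access.key` and `fs.s3a.secret.key` are Hadoop S3A settings, prefixed with `spark.hadoop.` so that Spark forwards them.

```python
from typing import Dict, Mapping


def s3a_credentials_conf(env: Mapping[str, str]) -> Dict[str, str]:
    """Map AWS-style environment variables to Hadoop S3A settings for Spark."""
    return {
        "spark.hadoop.fs.s3a.access.key": env["AWS_ACCESS_KEY_ID"],
        "spark.hadoop.fs.s3a.secret.key": env["AWS_SECRET_ACCESS_KEY"],
    }


def build_session(app_name: str, conf: Mapping[str, str]):
    """Create a SparkSession with the given settings (requires pyspark)."""
    # Imported lazily so the helper above can be used without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

A call such as `build_session("read-json", s3a_credentials_conf(os.environ))` would then create the session. Configuring keys does not grant access by itself: the identity behind them still needs permission to list the bucket and read its objects.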
