How to Read a Large Parquet File in Python

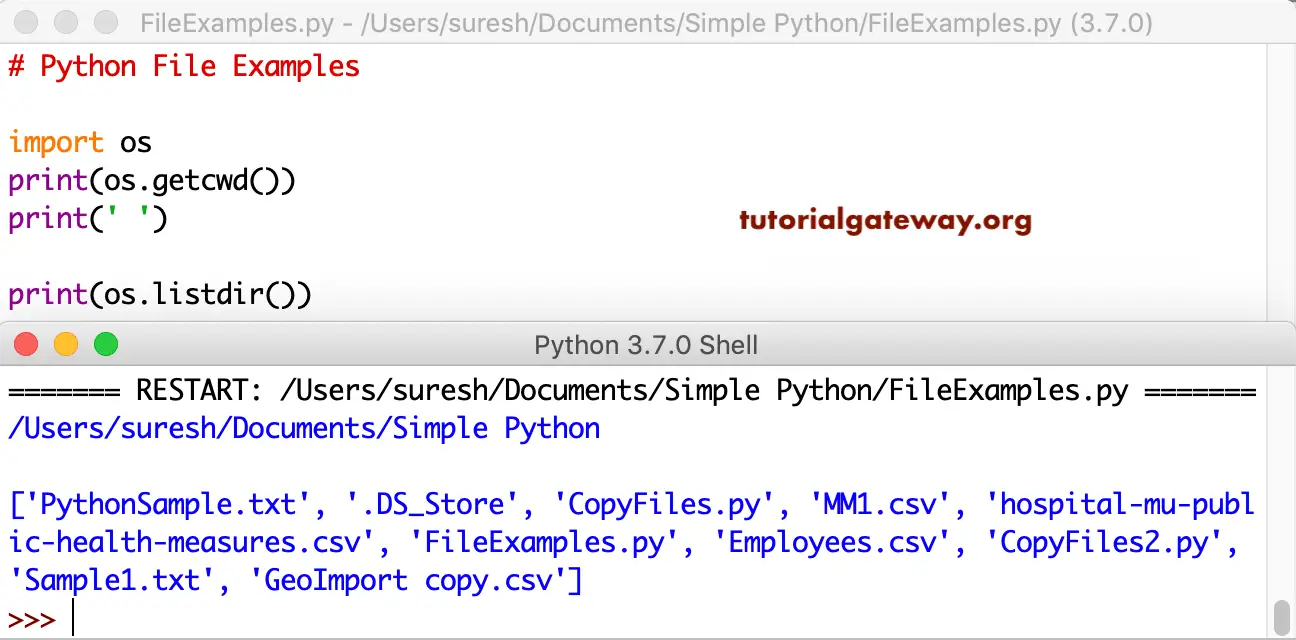

How do you read a Parquet file that is too large for memory, say 30 GB, in Python? The short answer: read it using Dask. How the file is opened matters as well. In general, a Python file object gives the worst read performance, while a string file path or an instance of PyArrow's `NativeFile` (especially a memory map) performs best. Memory mapping has a limit, though: because Parquet data must be decoded from the Parquet format and its compression, it cannot be mapped directly from disk, so memory mapping may be faster on some systems but does little to reduce resident memory. Several files can be passed to Dask together, as in `files = ['file1.parq', 'file2.parq', ...]` followed by `ddf = dd.read_parquet(files, ...)`. With pandas, if the data was written as a series of chunk files, one suggested approach is `pd.read_parquet('chunks_*', engine='fastparquet')`. Whatever the tool, two options do most of the work: `columns` (if not `None`, only these columns are read from the file) and row-group selection (only the listed row groups are read). The main Python libraries for Parquet are pandas, fastparquet, PyArrow, and PySpark.

On the writing side, `DataFrame.to_parquet()` writes a DataFrame to the binary Parquet format. On the reading side, a common case is loading a large number (hundreds to thousands) of Parquet files into a single Dask DataFrame on one machine, with all files stored locally. In every case, only read the columns required for your analysis. If the combined data does fit in memory, pandas can read a whole directory at once with `df = pd.read_parquet('path/to/the/parquet/files/directory')` (after `import pandas as pd`). This concatenates everything into a single DataFrame, so you can convert it to a CSV right after.

When writing, you can choose between Parquet backends (`engine='pyarrow'` or `engine='fastparquet'`) and pick a compression codec. When reading, `read_parquet` takes a `columns` argument (list, default `None`); if it is not `None`, only these columns are read from the file.

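A typical case: reading a decently large Parquet file (about 2 GB, roughly 30 million rows) into a Jupyter notebook (Python 3) with the pandas `read_parquet` function. If the file was written with several row groups, PyArrow can process it one row group at a time: open it with `pq_file = pq.ParquetFile('filename.parquet')`, loop `for grp_idx in range(pq_file.num_row_groups):`, and in each iteration call `df = pq_file.read_row_group(grp_idx, use_pandas_metadata=True).to_pandas()` followed by `process(df)`. This only helps when the file actually has more than one row group; if you don't have control over how the Parquet file is created, check `num_row_groups` first.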

Runtime is another common problem. `pandas.read_parquet` needs an engine, so install the `pyarrow` or `fastparquet` library (or both) first. For many files, one approach combines Dask with `dask.delayed`: collect the paths with `files = glob.glob('data/*.parquet')` and wrap a per-file reader in a `@delayed` function so each file is loaded lazily (one such snippet imports fastparquet's `ParquetFile` for the reader).

PyArrow can also read streaming batches from a Parquet file. This suits a file that is quite large (say 6 million rows) but only needs to be processed piece by piece.

On Windows, write the path as a raw string: `parquet_file = r'location\to\file\example_pa.parquet'`, then `pd.read_parquet(parquet_file, engine='pyarrow')`. Without the `r` prefix, the `\t` and `\f` in that path are read as a tab and a form feed. Because Parquet is a columnar format supported by many other data processing systems, the same files can be read outside Python as well.

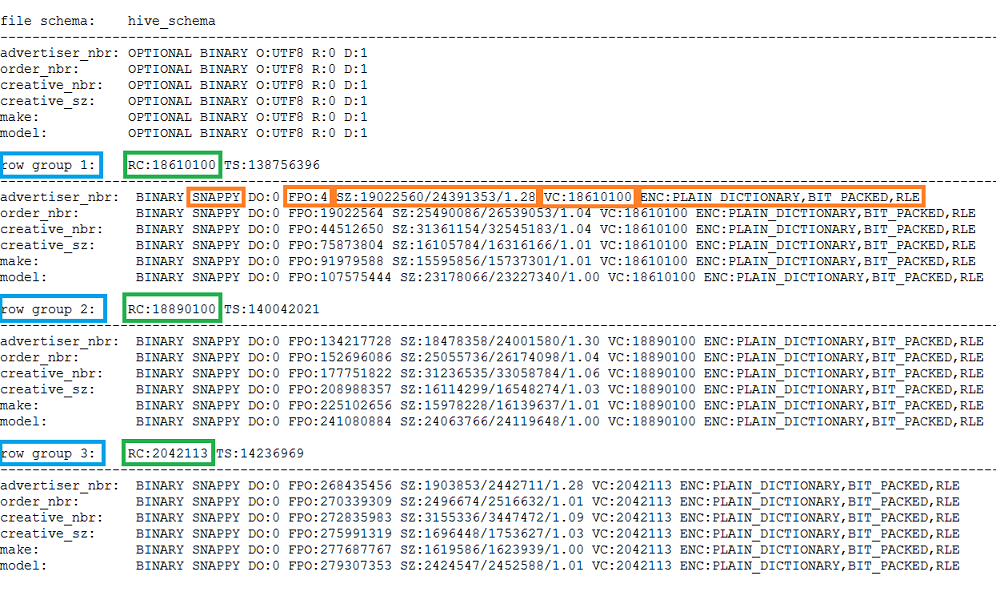

When no engine is given, pandas uses the `io.parquet.engine` option. Its default, `'auto'`, tries `'pyarrow'` first and falls back to `'fastparquet'` if `'pyarrow'` is unavailable.
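A quick way to inspect and temporarily pin that option, assuming a standard pandas install where the option has not been changed.

```python
import pandas as pd

# The global default is "auto": try pyarrow, fall back to fastparquet.
print(pd.get_option("io.parquet.engine"))

# Pin the engine for a block of code without changing it globally...
with pd.option_context("io.parquet.engine", "pyarrow"):
    current = pd.get_option("io.parquet.engine")

# ...or pass engine="pyarrow" / engine="fastparquet" to each read_parquet call.
print(current)
```

Passing `engine=` explicitly makes results reproducible across machines with different libraries installed.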

With PyArrow imported (`import pyarrow as pa` and `import pyarrow.parquet as pq`), the number of row groups is available before any data is read: `pq_file = pq.ParquetFile('filename.parquet')`, then `n_groups = pq_file.num_row_groups`, then `for grp_idx in range(n_groups):`. When reading in batches instead, note that batches may be smaller than requested if there aren't enough rows left in the file.

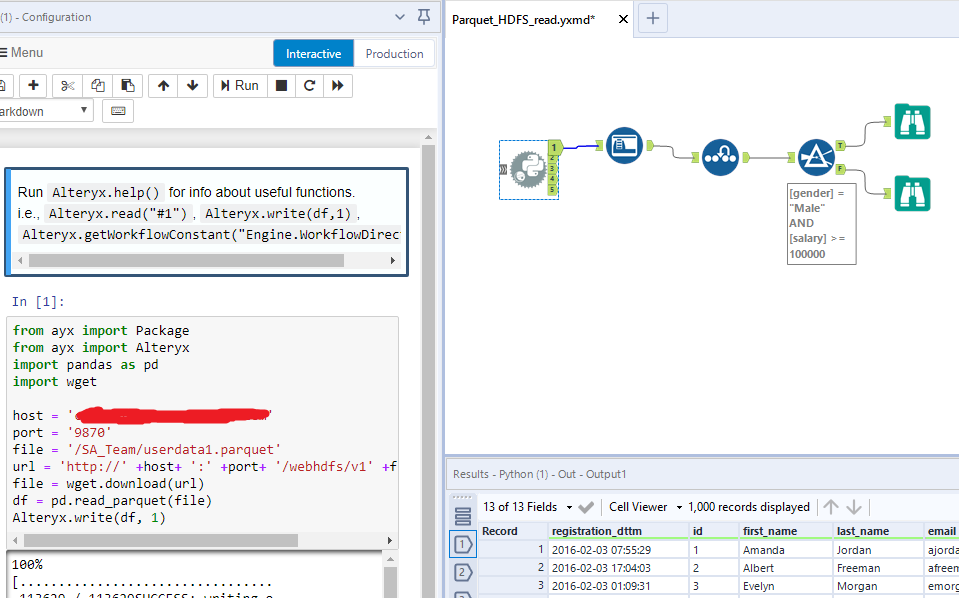

Writing usually starts elsewhere: retrieve data from a database, convert it to a DataFrame, and write the records to a Parquet file with pandas, fastparquet, PyArrow, or PySpark. On the reading side, having both the `pyarrow` and `fastparquet` libraries installed gives `read_parquet` a choice of engine.

The `path` parameter of `read_parquet` accepts a string, a path object, or a file-like object. Outside pandas, Spark SQL provides support for both reading and writing Parquet files and automatically preserves the schema of the original data.

Memory is often the real limit. A typical case: there isn't enough memory to read the file with fastparquet's default settings, and the question is how to lower memory usage. The remedies are the ones above: read only the columns you need, and process the file one row group or batch at a time instead of all at once.

PyArrow exposes both kinds of selection directly. `ParquetFile.read_row_groups()` takes a `row_groups` list (only these row groups will be read from the file), and `ParquetFile.iter_batches()` takes `batch_size`, the maximum number of records to yield per batch.

Reading Many Parquet Files at Once

One such task: loading about 120,000 Parquet files, 20 GB in total. With that many small files, per-file overhead can dominate, so it can help to treat the whole folder as a single dataset instead of opening each file by hand.

Reading Hundreds to Thousands of Parquet Files into One Dask DataFrame

Dask takes the list of files (or a directory) in a single `dd.read_parquet()` call and returns one lazy DataFrame. The same idea is used for training models on data that won't fit in memory: one snippet starts with `raw_ddf = dd.read_parquet('data.parquet')` to break down a huge file and pairs it with PyTorch's `torch.utils.data` classes (`TensorDataset`, `DataLoader`, `IterableDataset`, `Dataset`).

I Found Some Solutions to Read It, but It's Taking Almost an Hour

A workable setup combines Dask with a batch-loading approach to get parallelism. If the same data will be reread often, the storage format matters too; pickle, Feather, Parquet, and HDF5 are the usual candidates. For a single file that is too large for memory, the advice stays the same: read it using Dask.