PySpark Read From S3

PySpark supports various file formats, such as CSV, JSON, and Parquet, and once PySpark is set up you can read files stored in Amazon S3 directly into a DataFrame. The objective of this article is to build an understanding of basic read and write operations on Amazon S3, including reading Parquet files located in S3 buckets on AWS (Amazon Web Services). If you have access to the system that creates these files, the simplest way to approach…
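
A minimal sketch of reading Parquet files from S3 follows. It assumes an existing `SparkSession` named `spark` and that the `hadoop-aws` (S3A) connector is on Spark's classpath so the `s3a://` scheme resolves. The helper names and the bucket and prefix used below are hypothetical placeholders.

```python
# Sketch: load Parquet files from S3 into a Spark DataFrame.
# Assumes the hadoop-aws (S3A) connector is available to Spark.


def s3a_path(bucket: str, key: str = "") -> str:
    """Join a bucket name and an object key or prefix into an s3a:// URI."""
    return f"s3a://{bucket.strip('/')}/{key.lstrip('/')}"


def read_parquet_from_s3(spark, bucket: str, prefix: str):
    """Read every Parquet file under `prefix` into a single DataFrame.

    `spark` is an existing SparkSession; `spark.read` is a DataFrameReader,
    and its parquet() method accepts a single file or a directory path.
    """
    return spark.read.parquet(s3a_path(bucket, prefix))
```

With a session in hand, `df = read_parquet_from_s3(spark, "my-example-bucket", "events/")` followed by `df.printSchema()` shows the schema Spark took from the Parquet files' own metadata.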

Every read goes through `DataFrameReader`, the interface used to load a DataFrame from external storage, which PySpark exposes as `spark.read`. For CSV, Spark SQL provides `spark.read.csv(path)` to read a file from Amazon S3, the local file system, HDFS, and many other data sources; this is also the call to use when reading a CSV from S3 as a Spark DataFrame with PySpark on Spark 2.4.
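
The sketch below reads a CSV file from S3 with `spark.read.csv()`. The defaults are assumptions, not requirements: `header=True` treats the first line as column names, and `inferSchema=True` asks Spark to work out column types, which costs an extra pass over the data. `csv_options` and `read_csv_from_s3` are hypothetical helper names.

```python
# Sketch: read a CSV file from S3 (or a local / HDFS path) with spark.read.csv().

# Hypothetical defaults; header, inferSchema and sep are real keyword
# arguments of DataFrameReader.csv().
DEFAULT_CSV_OPTIONS = {"header": True, "inferSchema": True, "sep": ","}


def csv_options(**overrides) -> dict:
    """Return the default CSV options with any caller overrides applied."""
    return {**DEFAULT_CSV_OPTIONS, **overrides}


def read_csv_from_s3(spark, path: str, **overrides):
    """Read the CSV at `path` (for example "s3a://bucket/file.csv") into a DataFrame."""
    return spark.read.csv(path, **csv_options(**overrides))
```

A semicolon-delimited file could then be read with `read_csv_from_s3(spark, "s3a://my-example-bucket/sales.csv", sep=";")`, where the path is again a placeholder.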

To read a JSON file from Amazon S3 and create a DataFrame, you can use either `spark.read.json(path)` or the generic `spark.read.format("json").load(path)`; both go through the same `DataFrameReader`.
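
Both forms appear in the sketch below, selected by a flag. It assumes an existing `SparkSession` named `spark`. The `to_s3a` helper is a hypothetical convenience that rewrites `s3://` URIs to the `s3a://` scheme used by the open-source Hadoop S3A connector; on Amazon EMR, `s3://` is served by EMRFS instead, so you would not rewrite there.

```python
# Sketch: two equivalent ways to read JSON from S3 into a DataFrame.


def to_s3a(uri: str) -> str:
    """Rewrite an s3:// URI to s3a://; other URIs are returned unchanged."""
    if uri.startswith("s3://"):
        return "s3a://" + uri[len("s3://"):]
    return uri


def read_json_from_s3(spark, uri: str, generic: bool = False):
    """Read JSON at `uri` with either the shortcut or the generic reader."""
    path = to_s3a(uri)
    if generic:
        # Generic form: name the data source, then load the path.
        return spark.read.format("json").load(path)
    # Shortcut method on DataFrameReader for the same JSON data source.
    return spark.read.json(path)
```

By default Spark expects JSON Lines (one JSON object per line); for a file that holds a single multi-line JSON document, pass `multiLine=True` to `spark.read.json()`.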

If you need to read your files in an S3 bucket, only a few steps are needed. The walkthrough "How to access S3 from PySpark" (Apr 22, 2019) assumes that you have already installed PySpark.

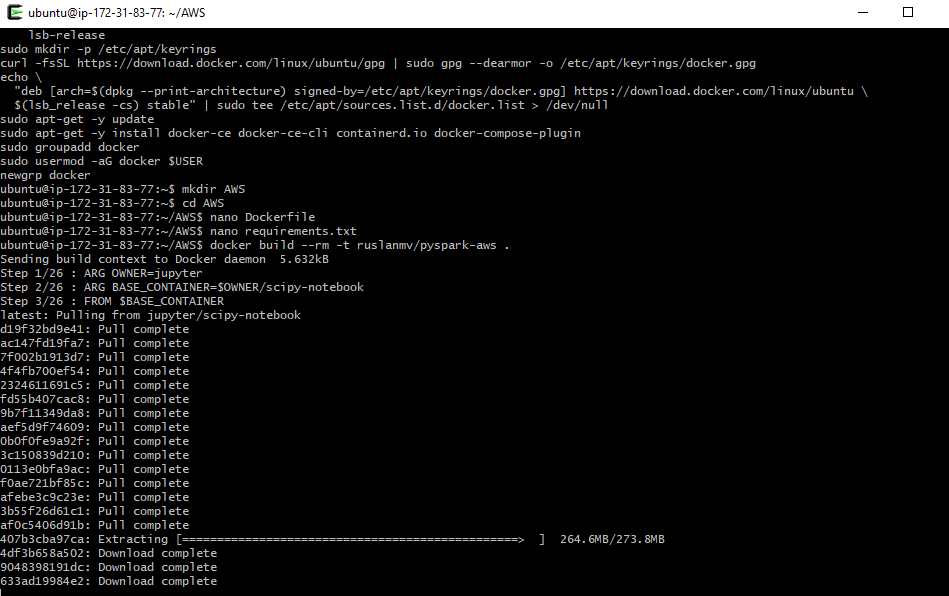

How to read and write files from S3 bucket with PySpark in a Docker

Now you can read the data from S3 into a local PySpark DataFrame. For plain text files, use the `spark.read.text()` function: it returns a DataFrame with one row per line, stored in a single string column named `value`.
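
A sketch of reading text files, assuming an existing `spark` session and a hypothetical bucket of `.txt` log files. `spark.read.text()` accepts Hadoop glob patterns, so the hypothetical `text_glob` helper builds one that matches every file with a given extension directly under a prefix.

```python
# Sketch: read text files from S3; each line becomes one row in a "value" column.


def text_glob(bucket: str, prefix: str, ext: str = "txt") -> str:
    """Build an s3a:// glob matching every *.<ext> file directly under `prefix`."""
    base = f"s3a://{bucket.strip('/')}"
    key = prefix.strip("/")
    return f"{base}/{key}/*.{ext}" if key else f"{base}/*.{ext}"


def read_text_lines(spark, bucket: str, prefix: str):
    """Return a DataFrame with one row per non-blank line in column `value`."""
    df = spark.read.text(text_glob(bucket, prefix))
    # df.value refers to the single string column spark.read.text() creates.
    return df.filter(df.value != "")
```

Passing `wholetext=True` to `spark.read.text()` instead yields one row per file rather than one row per line.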

Because the Parquet, CSV, JSON, and text readers are all methods on the same `DataFrameReader` interface, we can also load our data from S3 into a Spark DataFrame generically: name the format with `spark.read.format(...)` and call `.load(path)`.
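
The sketch below wraps that pattern in one function. It assumes an existing `spark` session; `read_from_s3`, `normalize_format`, and the tuple of accepted formats are hypothetical choices for illustration (all five are data sources built into Spark). Reader options are passed through unchanged to `DataFrameReader.options()`.

```python
# Sketch: one entry point for any built-in file format via format(...).load(...).

BUILTIN_FORMATS = ("csv", "json", "parquet", "text", "orc")


def normalize_format(fmt: str) -> str:
    """Lower-case the format name and reject anything not in BUILTIN_FORMATS."""
    name = fmt.strip().lower()
    if name not in BUILTIN_FORMATS:
        raise ValueError(f"unsupported format {fmt!r}; expected one of {BUILTIN_FORMATS}")
    return name


def read_from_s3(spark, fmt: str, path: str, **options):
    """Load `path` with the named data source, e.g. read_from_s3(spark, "csv", p, header=True)."""
    return spark.read.format(normalize_format(fmt)).options(**options).load(path)
```

Keeping the format as a string makes it easy to drive the reader from configuration instead of hard-coding one shortcut method per file type.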

Step 1: Make Sure the Hadoop AWS Package Is Available When We Load Spark

Spark reaches S3 through the Hadoop S3A connector, which ships in the `hadoop-aws` package and is not bundled with every Spark distribution. Once that package is available and PySpark is set up, reading your files from an S3 bucket into a local PySpark DataFrame takes only a few steps.
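
One way to make the package available is to let Spark download it through `spark.jars.packages` when the session starts, as sketched below. The version string is an assumption: it has to match the Hadoop version your Spark build was compiled against (3.3.4 is only an example), and the setting takes effect only if no SparkSession is already running in the process. `hadoop_aws_conf` and `build_session` are hypothetical helper names.

```python
# Sketch: start a SparkSession with the hadoop-aws (S3A) connector on the classpath.

# Assumption: replace with the Hadoop version your Spark distribution ships with.
HADOOP_AWS_VERSION = "3.3.4"


def hadoop_aws_conf(version: str = HADOOP_AWS_VERSION) -> dict:
    """Spark config that resolves org.apache.hadoop:hadoop-aws (and its dependencies) from Maven."""
    return {"spark.jars.packages": f"org.apache.hadoop:hadoop-aws:{version}"}


def build_session(app_name: str = "pyspark-s3", extra_conf=None):
    """Create (or reuse) a SparkSession with hadoop-aws configured."""
    # Imported here so the config helper above works without PySpark installed.
    from pyspark.sql import SparkSession

    conf = {**hadoop_aws_conf(), **(extra_conf or {})}
    builder = SparkSession.builder.appName(app_name)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

On the command line, `spark-submit --packages org.apache.hadoop:hadoop-aws:3.3.4 app.py` achieves the same thing for a script named `app.py`.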

Spark Read JSON File From Amazon S3

It's time to get our .json data! JSON is also a convenient format for trying out both of the basic operations this article covers: reading a file from Amazon S3 into a DataFrame and writing a DataFrame back to S3.
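
As a sketch of both halves, the function below reads JSON from one S3 location and writes it back to another as Parquet. An existing `spark` session is assumed, the function names are hypothetical, and `"overwrite"` is the default save mode only for illustration; it replaces whatever already exists at the destination.

```python
# Sketch: read JSON from S3, then write the same data back to S3 as Parquet.

# Save modes accepted by DataFrameWriter.mode().
SAVE_MODES = ("append", "overwrite", "ignore", "error", "errorifexists")


def check_save_mode(mode: str) -> str:
    """Normalize and validate a save mode name."""
    name = mode.lower()
    if name not in SAVE_MODES:
        raise ValueError(f"unknown save mode {mode!r}")
    return name


def json_to_parquet(spark, src: str, dst: str, mode: str = "overwrite"):
    """Load JSON from `src`, write it as Parquet to `dst`, and return the DataFrame."""
    df = spark.read.json(src)
    df.write.mode(check_save_mode(mode)).parquet(dst)
    return df
```

A call might look like `json_to_parquet(spark, "s3a://my-example-bucket/raw/events.json", "s3a://my-example-bucket/curated/events/")`, with both paths hypothetical.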

To Read Data on S3 Into a Local PySpark DataFrame Using Temporary Security Credentials, You Need To:

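1. Make sure the `hadoop-aws` package is available to Spark, since it provides the S3A connector.
2. Pass the temporary access key, secret key, and session token to the S3A connector, and select `org.apache.hadoop.fs.s3a.TemporaryAWSCredentialsProvider` as its credentials provider.
3. Read the data from S3 into a local PySpark DataFrame, for example a text file with `spark.read.text()`.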
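
A minimal sketch of configuring S3A for temporary security credentials follows. It assumes `hadoop-aws` is already on the classpath and that the credentials (obtained, for example, from AWS STS) are exported in the standard AWS environment variables `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_SESSION_TOKEN`. The `spark.hadoop.` prefix copies each `fs.s3a.*` key into Spark's Hadoop configuration; the function names are hypothetical.

```python
# Sketch: configure the S3A connector for temporary (session) credentials.
import os

PROVIDER = "org.apache.hadoop.fs.s3a.TemporaryAWSCredentialsProvider"


def temporary_credentials_conf(access_key: str, secret_key: str, session_token: str) -> dict:
    """Spark config entries; the spark.hadoop. prefix forwards each key to Hadoop."""
    return {
        "spark.hadoop.fs.s3a.aws.credentials.provider": PROVIDER,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        "spark.hadoop.fs.s3a.session.token": session_token,
    }


def conf_from_env(env=None) -> dict:
    """Build the config from the standard AWS environment variables."""
    env = os.environ if env is None else env
    return temporary_credentials_conf(
        env["AWS_ACCESS_KEY_ID"],
        env["AWS_SECRET_ACCESS_KEY"],
        env["AWS_SESSION_TOKEN"],
    )


def local_session_with_temp_credentials(app_name: str = "s3-temp-creds"):
    """Start a local SparkSession whose S3A connector uses the temporary credentials."""
    # Imported lazily so the config helpers above work without PySpark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.master("local[*]").appName(app_name)
    for key, value in conf_from_env().items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

After that, `spark.read.parquet("s3a://my-example-bucket/data/")` (a hypothetical path) reads the data into a local PySpark DataFrame. Session tokens expire, so a long-running session will eventually need fresh credentials.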

Note That Our .json File Is A…

This assumes that PySpark is installed, as the "How to access S3 from PySpark" walkthrough (Apr 22, 2019) also does. JSON is only one of the file formats PySpark supports, alongside CSV and Parquet; all of them are loaded through `DataFrameReader`, the interface used to load a DataFrame from external storage.